Introduction

But first the teaser of what's finally built:-2023 certainly feels like the year of AI. Post public reveal of ChatGPT, especially, the reach of this technological revolution has reached beyond the computer folks. My cousin used ChatGPT to help her with articles. David Guetta is trying out AI tools now:-2023 certainly feels like the year of AI. Post public reveal of ChatGPT, especially, the reach of this technological revolution has reached beyond the computer folks. My cousin used ChatGPT to help her with articles. David Guetta is trying out AI tools now:-2023 certainly feels like the year of AI. Post public reveal of ChatGPT,

2023 certainly feels like the year of AI. Post public reveal of ChatGPT, especially, the reach of this technological revolution has reached beyond the computer folks. My cousin used ChatGPT to help her with articles. David Guetta is trying out AI tools now:-

Microsoft just revealed the AI supercharged Bing to take on Google (who are playing catch up using Bard). For the first time, I have felt like a AI technological revolution is truly here at the consumer level.

Trying out something

With all the AI fluff spreading like wildfire, it was only natural to do a hands-on. Call it FOMO or call it curiosity, there are all sorts of projects out there right now built upon Open AI APIs either directly or using replicate or some other API gateway to make it a plug-and-play solution.

fsdfsdf

I thought I might probably try out something around images since it's where my creative interests lie the most. I have certainly played with midjourney, Dall-E and Stable-Diffusion to see the capabilities and midjourney, by far is my favorite.

Coming across articles and code around Q&A with GPT-3

So while exploring and following the AI developments, the quality of life improvement that I feel AI is going to bring is to turn the heat up on Q&A formats that have existed for a long time. Take ChatGPT for instance. It's such a good Q&A assistant. With it's pre trained data, it performs certainly well. Heck, I even used it to ship a line of code to production at Hashnode not so long ago.

The above article on dagster's blog is probably read by many folks till now. TL;DR version :-

This article explains how to build a GitHub support bot using GPT-3, LangChain, and Python. It covers topics such as leveraging the features of a modern orchestrator (Dagster) to improve developer productivity and production robustness, Slack integration, dealing with fake sources, constructing the prompt with LangChain, dealing with documents that are too big, dealing with limited prompt window size, dealing with large numbers of documents, and caching the embeddings with Dagster to save time and money.

GPT-3 is a powerful model or to be more accurate a set of AI models that serve various purposes. ChatGPT is based on GPT-3.5 and so better at stuff than GPT-3. It's soon going to be available as an API as well.

What are the ways to train a GPT-3 model ?

There are several ways to train a GPT-3 model, including fine-tuning, data augmentation, and vector-space search. Fine-tuning involves training the model on a specific dataset, while data augmentation involves providing additional data to the model to improve its accuracy. Vector-space search involves using a search engine to find the most relevant sources for a given query.

A lot of technical jargon again but as far as I understand, there are two ways I like to understand how you can extend a model’s capability to do something for you:-

Fine-tuningaims to train a certain model with pre-trained data to get better at giving accurate answers to the user's provided prompts. Consider you want to fine-tuneGPT-3 model for certain documentation you have been working on. After going through the few articles and skimming through the fine-tuning docs, I got to understand that there are costs involved in fine-tuning a model using OpenAI. Also, models like

text-davinci-003cannot be used right now for it and so only base models can be used. Again, it's the lack of interest from my side here specifically that I haven't explored this fully. This probably might be the way to go to train on production data and get a model that performs exactly what you intend it to.Prompt engineering is the process of developing a great prompt to maximize the effectiveness of a large language model like GPT-3. It's like using a model but making it aware of a limited context and only asking it to answer based on that context. This awareness of context happens at runtime, unlike fine-tuning.

Here is another tweet and linked article I read recently which explores creating a Q&A model for documentation using the same prompt engineering:-

In fact, in its final Shoutouts section, the author has added references to the dagster article I shared above and more.

So I also wanted to explore the prompt engineering side of things. Langchain is one of the terms you would read or hear more about when touching this territory.

Langchain helps developers combine the power of large language models (LLMs) with other sources of computation or knowledge. It includes features like Data Augmented Generation, which allows developers to provide contextual data to augment the knowledge of the LLM, and prompt engineering, which helps developers develop a great prompt to maximize the effectiveness of a large language model like GPT-3.

In this article though, I do not dive into how langchain works. I am not even fully sure of the intricacies of how the math behind a lot of stuff behind all these AI stuff works but at the end of the day, it is all math. But one of the terms you will come across frequently when dealing with input data is embeddings. Embeddings help to represent words in a numerical form and can help us measure the similarities between these words. Think of it as a vocabulary that can fit a lot of words. So for big input data, you convert them into embeddings.

Here's is how I have understood prompt engineering to work:-

Prepare some data you want the AI model to be aware of.

Split that data into chunks of text and convert them into embeddings. (It's good to split data into chunks because OpenAI has limits on how many embeddings can be processed by its model as well but still, that limit is almost twice of if a raw string was given to the GPT-3 competition endpoint directly)

Calculate embeddings for the question and compare those with the input data embeddings. The closest ones are chosen to give us the context or the raw string that can be fed into the completion endpoint.

There is a prompt template involved so that the model can be made aware of what it needs to look for answers in and if it's not able to find it, it can say that it doesn't know the answer instead of giving the wrong one.

Here is how a prompt template might look:-

Answer the question based on the context below, and if the question can't be answered based on the context, say "I don't know"

Context: {context}

---

Question: {question}

Answer:

A web crawler powered Q&A service with OpenAI

In the dagster article, there was a point in their Future work section that stated this :-

Crawl web pages instead of markdown. It would be relatively straightforward to crawl a website’s HTML pages instead of markdown files in a GitHub repo.

This resonated with me and while exploring all this AI stuff, specifically on the openai fine-tuning docs, at the bottom of the page, there is an examples section that contains links to the python notebooks by openai relevant to Q&A. So I went through the notebooks involved, starting with this:-

The above landed me here:-

But I think the most important finding was that openai has these cookbooks essentially. So I went to the root folder to see what all they have and that's where I landed on this:-

The above cookbook was added just a week ago and does exactly what I was wondering about.

In a nutshell, it did the following:-

Crawl https://openai.com/ and generate all the text files for each of the links crawled.

Generate embeddings from all the text files by chunking them first.

The whole question and data embeddings compare step.

Then trying to answer a question via a prompt template (exactly what I mentioned before as an example).

I installed python3 on my system. Then copied the relevant files from GitHub. After a couple of Stack Overflow searches and setting up the OpenAI API key (paid stuff), the program ran and did exactly what was shown in the python notebook online.

Then everything from there was me running the same program for a couple of different sites. Started with the Next.JS blog and docs, my blog and a couple of other sites.

All in all, I refactored the whole code to be more reusable and utility oriented in the following manner:-

Allowing either to form the Q&A context on a single page or the crawled pages via a configurable

recursiveparameter.Modifying the folder and file-naming code to save data for each site that is crawled.

Not crawling or creating embeddings again if already exists for a site and proceeding directly to the answer part.

Creating a minimal flask service to consume the refactored code.

The whole refactor resulted in the following 5 python files:-

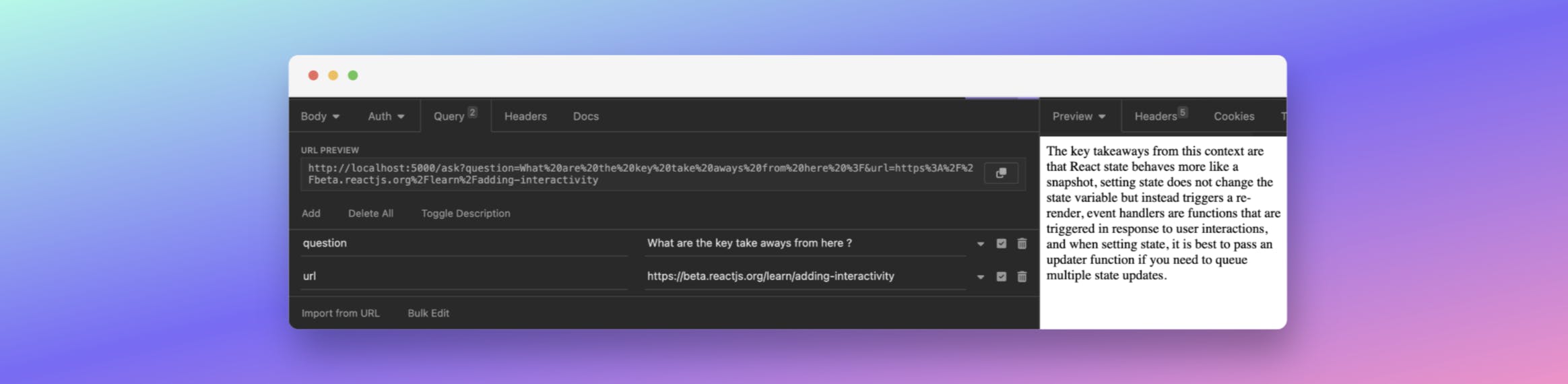

web.py: Managed the whole crawling and saving text files from the crawled links.utils.py: All the utility functions being used across different files.embeddings.py: Managed the whole generation and saving of theembeddings.csvbeing created.answer.py: Managed prompt engineering bit of the code.server.py: The flask service wrapper over our controller function.index.py: The file containing the high-level code that runs when the flask service is hit.# index.py file from web import crawl from embeddings import generate_url_embeddings from answer import getAnswer def train(url, recursive=False): crawl(url, recursive=recursive) generate_url_embeddings(url, recursive=recursive) def ask(question, url, recursive=False): train(url, recursive=recursive) return getAnswer(question, url, recursive=recursive)Playing around with the created API

nextjs.org/blog: This was created usingrecursive:trueso that we can crawl all the links and build the input data from there.Question: In which version of Next.js,

unoptimized:truegot supported insidenext.config.js?Reply by API: Starting with Next.js 12,

unoptimized:truegot supported insidenext.config.js.Question: What are the latest updates to middleware?

Reply by API: With 13.1, you can now return responses from Middleware, as well as set headers on the request. These API improvements give you powerful new flexibility to customize every part of the Next.js routing lifecycle. The

experimental.allowMiddlewareResponseBodyconfiguration option inside next.config.js is no longer required. You can now more easily set headers on the request, as well as respond directly without having to rewrite or redirect.

Fun fact: The TL;DR version of the dagster article I stated earlier was generated by this API and also the answer to the question on how we can train GPT-3 model and the bit about langchain.

https://vercel.com/legal/privacy-policy: Created usingrecursive:falseto just get the text for this page.Question: What is stated about data usage?

We use data to administer and improve the Services, analyze trends, track users' use of the Platform, and gather broad demographic information for aggregate use. We also use Device and Usage Information to generate and process aggregated information, such as statistical or demographic data. We may also track the total number of visitors to our Platform, review Log Data, or track the number of visitors to each page of our Site. We may also use Location Information derived from IP addresses. We do not, however, collect or process End Users’ IP addresses through the use of our analytics Services.

https://vercel.com/legal/terms(recursive:false)Question: Which terms are not in favor of the user?

You agree that violations of this Agreement by yourself or any person or entity acting under your account will, in addition to any other remedies including criminal prosecution, result in the termination of your access to Vercel's website and removal (taking down) of all projects and deployments. In addition, violation of these terms or any of Vercel's policies may result in tracking information being stored to identify the offending person and permanent restriction from holding an account on Vercel's website and Services. We may shut down affected projects or deployments on the hobby plan in case of any delays or performance problems including, without limitation, those caused by a malicious attack on a project or deployment. We will notify you if your usage is unreasonable, and we may shut down and terminate projects and accounts that are creating an unreasonable burden on our infrastructure and/or business operations, as outlined in Sections 9 and 16.1 below. All fees are non-refundable, except as expressly stated otherwise in this Agreement.

Question: Which terms are in favor of the user? Summarize them in less than 50 words.

The user is granted a personal, non-sublicensable, nonexclusive, nontransferable, limited license to use the Services for their internal business or personal purposes. They are also granted a license to use and reproduce their trademarks, tradenames and logos in Vercel's marketing materials and website(s). Vercel will abide by any written trademark usage guidelines provided by the user. Vercel will also provide thirty (30) days prior notice for any changes to the fees or Agreement.

Latest featured article on hashnode - https://iwtyo.today/cognitive-bias-as-a-software-developer (

recursive:false)Question: Create a TL;DR version of this article

Recognizing our own cognitive biases is essential to our lives and work. Egoless coding is a great practice to cultivate, as it helps us to accept and move on from our less-than-perfect code. To identify our skill level, we can ask our colleagues for their honest opinion. We should also break down our core skills into their most basic components and assess our proficiency in each one. Lastly, don't be afraid to seek help if you think you may be suffering from Imposter Syndrome.

Question: What are all the ways to identify skills?

Identifying your skills can be done by assessing your proficiency in each component of your core skill, asking your colleagues for their honest opinion of your capability, contrasting your point of view with others, recognizing the role others can play in helping you improve, building communication and relationships, acknowledging the skills that you have and learning the ones that you don't, and recognizing cognitive biases.

All right. I might have gone overboard by stating the examples but trust me, I have run this service on a lot of sites by now. Thus the bill:-

If you want to play around with the code, feel free to check out the following:-

Limitations

As the partial author of this code, I am not aware of the internal workings of how the embeddings are logically compared. I would say it's a knowledge limitation that can be overcome by someone else who is good at python and general ML stuff or by me if I get into

mathsagain. For me, most of the things are a black box and work on high-level concepts.The speed is a total bummer. It takes minutes to train a

recursive:truesite and40-50sfor arecursive:falseone. Again the query to the completion endpoint with the saved embeddings can take between10-60s. But if you're crawling a 20 mins article then it's not that big a deal.There is a cost associated with the creation of embeddings and query completion but probably much less than fine-tuning.

Sometimes the training isn't good enough and results in a lot of "I don't know" responses even when it shouldn't have. Also, I have seen the model returning wrong answers in a few instances even with this prompt template.

Conclusion

This effort was purely done out of curiosity and touching the AI waters. I for one am excited to try out the Bing chat assist because it fundamentally can do all the above very fast and at a much greater precision. Nevertheless, this was fun to create. Python isn't my work language so my command of it isn't as good. But in the era when there is GithubCopilot, I guess the lack of command gets partially hidden by it.

The transformation of the tech industry by AI is what I am looking forward to.

Thank you for your time :)